Binary Search Tree Worst Case Time Complexity

News Leon

Apr 02, 2025 · 6 min read

Table of Contents

Binary Search Tree: Worst-Case Time Complexity Deep Dive

The Binary Search Tree (BST) is a fundamental data structure in computer science, renowned for its efficiency in search, insertion, and deletion operations. However, its performance isn't uniformly excellent. While boasting an average time complexity of O(log n), where 'n' is the number of nodes, the BST's worst-case scenario can dramatically degrade performance to O(n). Understanding this worst-case time complexity is crucial for leveraging BSTs effectively and anticipating potential performance bottlenecks. This article provides an in-depth exploration of the worst-case time complexity of BSTs, examining the scenarios that trigger it, its implications, and strategies to mitigate its negative impact.

Understanding the Idealized BST and its Logarithmic Performance

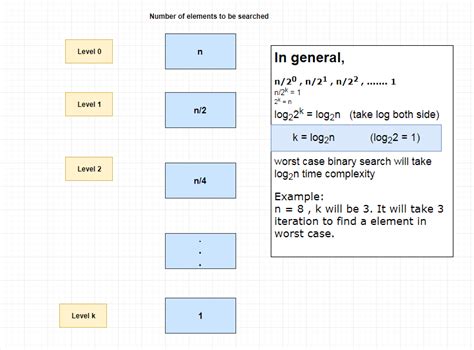

Before diving into the worst-case, let's refresh our understanding of how a balanced BST achieves its optimal O(log n) time complexity. In a perfectly balanced BST, each node (except leaf nodes) has two children, and the height of the tree is approximately log₂(n). This balanced structure allows for efficient searching, insertion, and deletion.

-

Search: When searching for a specific value, the algorithm starts at the root node and compares the target value with the node's value. If they match, the search is successful. If the target is smaller, the algorithm recursively searches the left subtree; otherwise, it searches the right subtree. This logarithmic process rapidly narrows down the search space.

-

Insertion: Similar to searching, insertion involves traversing the tree to find the appropriate position for the new node, maintaining the BST property (left subtree < node < right subtree).

-

Deletion: Deletion is slightly more complex, but the fundamental principle remains – traversing the tree to locate the node and adjusting the tree structure to maintain the BST property after removal. The process can involve finding a successor or predecessor node.

In all three operations, the number of comparisons required is proportional to the height of the tree, which is approximately log₂(n) in a balanced BST. This is why a balanced BST boasts O(log n) time complexity for these operations.

The Worst-Case Scenario: The Degenerate Tree

The worst-case time complexity of O(n) arises when the BST becomes highly unbalanced, degenerating into a linear structure resembling a linked list. This happens when the input data is already sorted or nearly sorted in ascending or descending order.

Imagine inserting the numbers 1, 2, 3, 4, 5, ... sequentially into a BST. Each subsequent number will become a right child of the preceding one, creating a right-leaning, skewed tree. This results in a tree where the height is equal to the number of nodes (n), transforming the logarithmic search into a linear one.

Visual Representation of a Degenerate BST:

1

\

2

\

3

\

4

\

5

In this degenerate tree, searching for the number 5 requires traversing all 5 nodes. The same applies to insertion and deletion. This linear traversal results in the O(n) worst-case time complexity.

Why is this a problem?

The O(n) time complexity obliterates the efficiency advantages of a BST. In large datasets, operations become incredibly slow, rendering the BST less efficient than simpler linear data structures like linked lists. This makes it crucial to detect and address this issue.

Analyzing Operations in a Degenerate BST

Let's explicitly analyze the time complexity of each operation in a degenerate BST:

-

Search: In the worst case, you might have to traverse all 'n' nodes to find the target value, leading to O(n) time complexity.

-

Insertion: Inserting a new node also requires traversing the entire tree to find its appropriate position, resulting in O(n) time complexity.

-

Deletion: Similar to insertion, deleting a node requires traversing the entire tree, leading to O(n) time complexity.

Factors Contributing to Degenerate BSTs

Several factors can lead to the formation of degenerate BSTs:

-

Sorted or Nearly Sorted Input: The most common cause is inserting data that is already sorted or nearly sorted. This sequential insertion leads to an unbalanced structure.

-

Lack of Self-Balancing: Standard BST implementations don't inherently possess self-balancing mechanisms. They rely on the input data's randomness to maintain a reasonably balanced structure.

-

Poor Data Distribution: If the data distribution is heavily skewed, resulting in an unequal number of nodes in the left and right subtrees, the tree can become unbalanced.

Mitigation Strategies: Self-Balancing BSTs

The key to avoiding the worst-case scenario is employing self-balancing BSTs. These advanced data structures automatically adjust their structure during insertions and deletions to maintain a balanced tree, ensuring logarithmic time complexity. Some popular examples include:

-

AVL Trees: AVL trees maintain balance by ensuring that for each node, the height difference between its left and right subtrees is at most 1.

-

Red-Black Trees: Red-black trees use a color-based system (red and black nodes) to enforce balance, allowing for a slightly less stringent balance condition than AVL trees, resulting in potentially faster insertion and deletion operations.

-

B-Trees: B-Trees are particularly useful for disk-based storage because they minimize the number of disk accesses. They are balanced and maintain a specific order and structure for nodes.

-

2-3 Trees and 2-3-4 Trees: These are self-balancing tree data structures that guarantee logarithmic time complexity for search, insertion, and deletion.

These self-balancing BSTs implement sophisticated rotation and recoloring algorithms to ensure that the tree remains relatively balanced despite insertions and deletions. This prevents the tree from degenerating into a linear structure and maintains the desirable O(log n) time complexity.

Choosing the Right BST Implementation

The choice of which BST implementation to use depends on several factors including:

-

Frequency of Operations: If you anticipate a high frequency of insertions and deletions, a red-black tree or AVL tree might be preferred due to their relatively efficient rebalancing algorithms.

-

Storage Requirements: Consider the memory overhead associated with each self-balancing algorithm. AVL trees can have slightly higher overhead compared to red-black trees.

-

Specific Application Requirements: The choice will depend on the needs of your specific application, such as needing a tree that performs exceptionally well under highly skewed insertion patterns.

For many applications, standard BSTs combined with careful consideration of data input are sufficient, while others might necessitate the overhead of a self-balancing algorithm to ensure consistently efficient performance.

Conclusion

The worst-case time complexity of O(n) for a Binary Search Tree is a critical consideration. While the average-case logarithmic performance is highly attractive, the potential for degeneration into a linear structure cannot be ignored. Understanding the factors leading to degenerate trees and employing self-balancing alternatives such as AVL trees, red-black trees, or others, is essential for creating robust and efficient data structures that consistently deliver on their promise of logarithmic time complexity for search, insertion, and deletion operations. By carefully selecting and implementing the appropriate BST variant, developers can ensure their applications maintain optimal performance even under demanding circumstances. This understanding of the complexities inherent in BST implementation allows for informed choices and prevents potential performance pitfalls in projects requiring efficient data handling. Remember, proactive mitigation is key to avoiding the performance limitations of a degenerate BST.

Latest Posts

Latest Posts

-

True Or False All Whole Numbers Are Rational Numbers

Apr 03, 2025

-

What Is The Product Of Any Integer And 1

Apr 03, 2025

-

All The Following Are Threats To Biodiversity Except

Apr 03, 2025

-

Electric Field Of A Sphere Formula

Apr 03, 2025

-

Can A Diverging Lens Produce A Real Image

Apr 03, 2025

Related Post

Thank you for visiting our website which covers about Binary Search Tree Worst Case Time Complexity . We hope the information provided has been useful to you. Feel free to contact us if you have any questions or need further assistance. See you next time and don't miss to bookmark.